Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there is an XML version available for digesting as well.

Page not found. Your pixels are in another canvas.

About me

This is a page not in th emain menu

Published:

This post will show up by default. To disable scheduling of future posts, edit config.yml and set future: false.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Short description of portfolio item number 1

Short description of portfolio item number 2

Proceedings of The 4th Annual Learning for Dynamics and Control Conference, 2022

Time-reversal symmetry arises naturally as a structural property in many dynamical systems of interest. While the importance of hard-wiring symmetry is increasingly recognized in machine learning, to date this has eluded time-reversibility. In this paper, we propose a new neural network architecture for learning time-reversible dynamical systems from data. We focus in particular on an adaptation to symplectic systems, because of their importance in physics-informed learning. (oral)

Proceedings of the 2nd Annual Workshop on Topology, Algebra, and Geometry in Machine Learning (TAG-ML) at the 40th In- ternational Conference on Machine Learning, 2023

The problem of detecting and quantifying the presence of symmetries in datasets is useful for model selection, generative modeling, and data analysis, amongst others. While existing methods for hard-coding transformations in neural networks require prior knowledge of the symmetries of the task at hand, this work focuses on discovering and characterising unknown symmetries present in the dataset, namely, Lie group symmetry transformations beyond the traditional ones usually considered in the field (rotation, scaling, and translation).

Proceedings of the 4th Visual Inductive Priors for Data-Efficient Deep Learning Workshop at ICCV, 2023

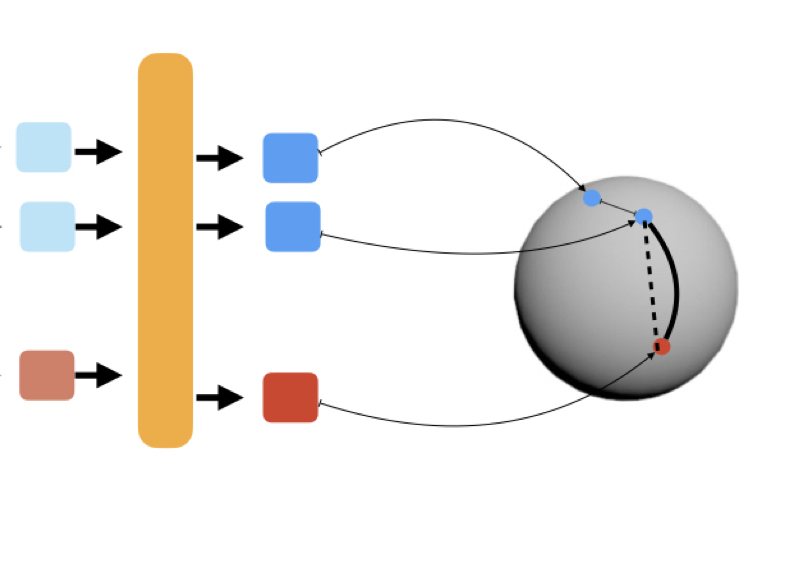

Contrastive learning has been a long-standing research area due to its versatility and importance in learning representations. Recent works have shown improved results if the learned representations are constrained to be on a hy- persphere. However, this prior geometric constraint is not fully utilized during training. In this work, we propose mak- ing use of geodesic distances on the hypersphere to learn contrasts between representations. (oral)

1st workshop on Synergy of Scientific and Machine Learning Modeling, SynS & ML ICML., 2023

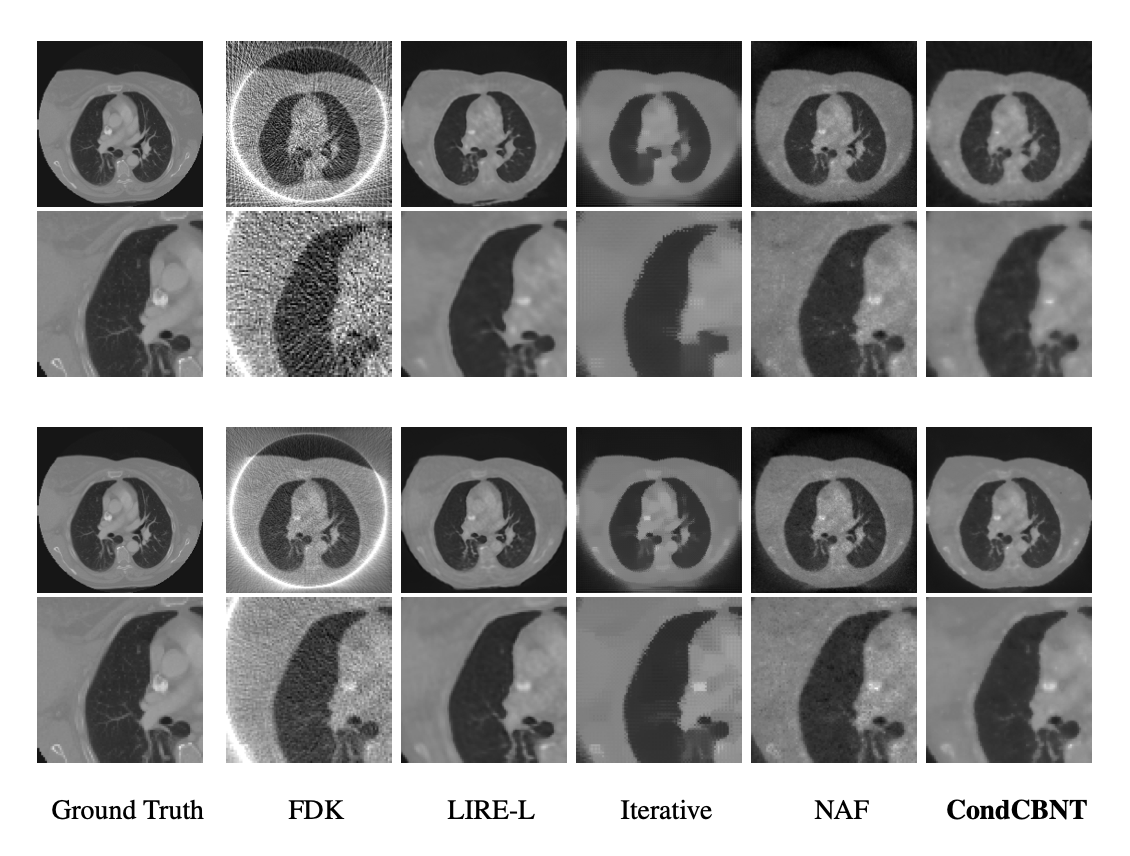

Conventional Computed Tomography (CT) methods require large numbers of noise-free projections for accurate density reconstructions, limiting their applicability to the more complex class of Cone Beam Geometry CT (CBCT) reconstruction. We propose a novel conditioning method where local modulations are modeled per patient as a field over the input domain through a Neural Modulation Field (NMF). The resulting Conditional Cone Beam Neural Tomography (CondCBNT) shows improved performance for both high and low numbers of available projections on noise-free and noisy data.

CVPR, 2024

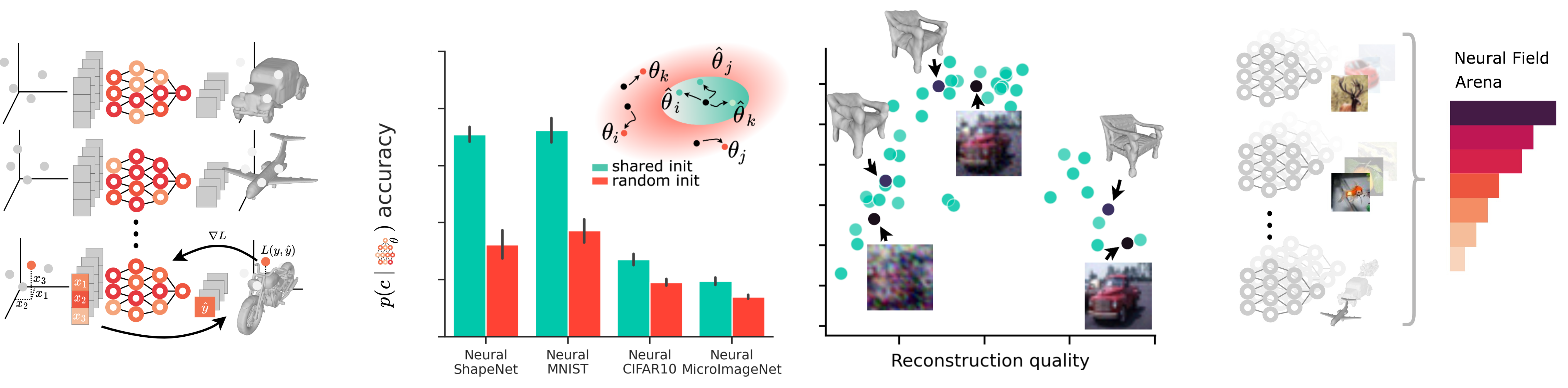

Neural fields (NeFs) have recently emerged as a versatile method for modeling signals of various modalities, including images, shapes, and scenes. Subsequently, a number of works have explored the use of NeFs as representations for downstream tasks, e.g. classifying an image based on the parameters of a NeF that has been fit to it. However, the impact of the NeF hyperparameters on their quality as downstream representation is scarcely understood and remains largely unexplored. This is in part caused by the large amount of time required to fit datasets of neural fields. In this work, we propose a JAX-based library that leverages parallelization to enable fast optimization of large-scale NeF datasets, resulting in a significant speed-up. With this library, we perform a comprehensive study that investigates the effects of different hyperparameters on fitting NeFs for downstream tasks. In particular, we explore the use of a shared initialization, the effects of overtraining, and the expressiveness of the network architectures used. Our study provides valuable insights on how to train NeFs and offers guidance for optimizing their effectiveness in downstream applications. Finally, based on the proposed library and our analysis, we propose Neural Field Arena, a benchmark consisting of neural field variants of popular vision datasets, including MNIST, CIFAR, variants of ImageNet, and ShapeNetv2. Our library and the Neural Field Arena will be open-sourced to introduce standardized benchmarking and promote further research on neural fields.

ICML, 2024

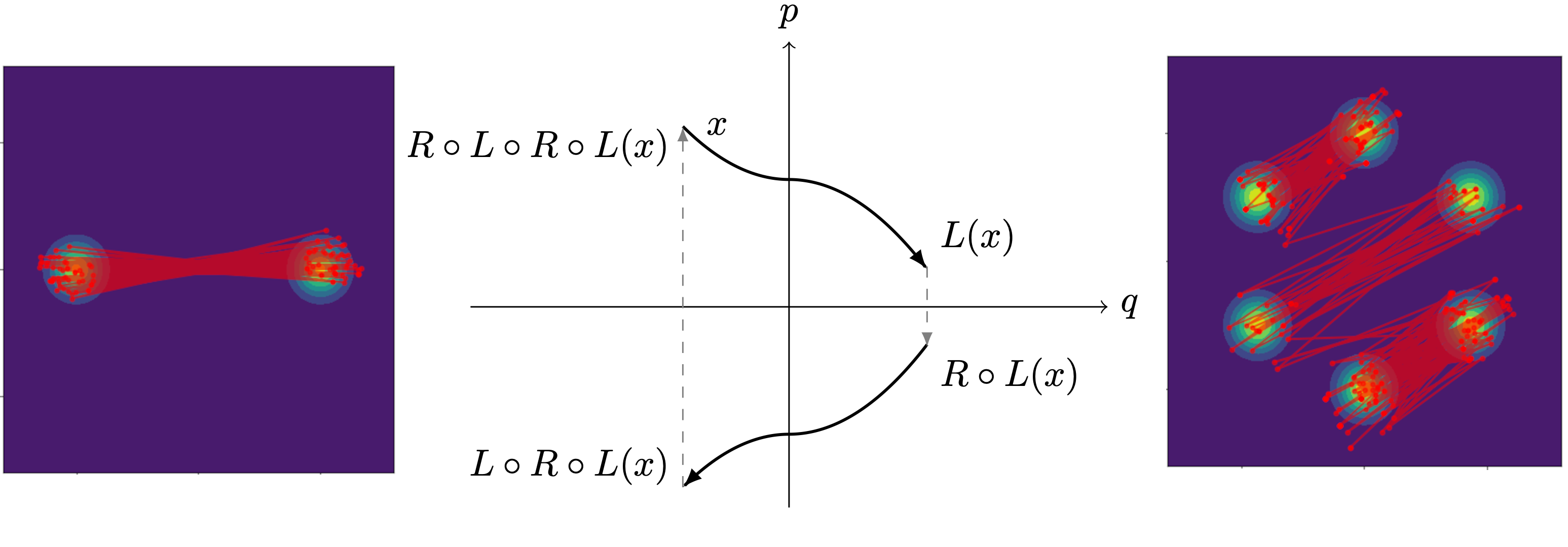

Markov chain Monte Carlo methods have become popular in statistics as versatile techniques to sample from complicated probability distributions. In this work, we propose a method to parameter- ize and train transition kernels of Markov chains to achieve efficient sampling and good mixing. This training procedure minimizes the total variation distance between the stationary distribution of the chain and the empirical distribution of the data. Our approach leverages involutive Metropolis-Hastings kernels constructed from reversible neural networks that ensure detailed balance by construction. We find that reversibility also implies C2-equivariance of the discriminator function which can be used to restrict its function space.

NeurIPS, 2024

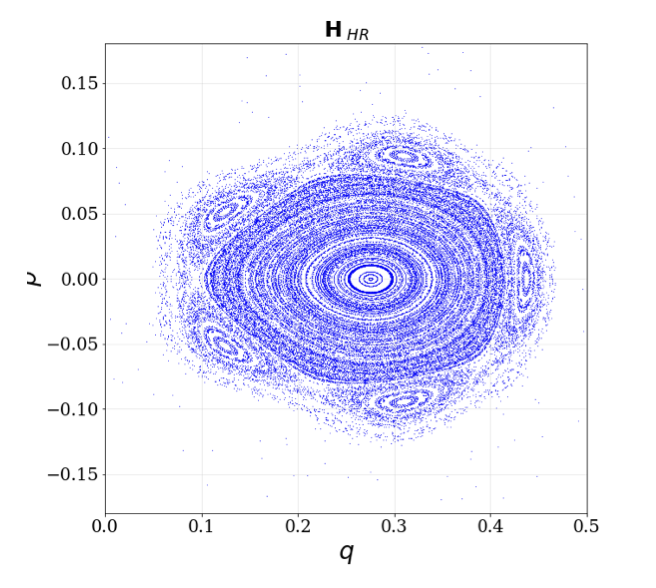

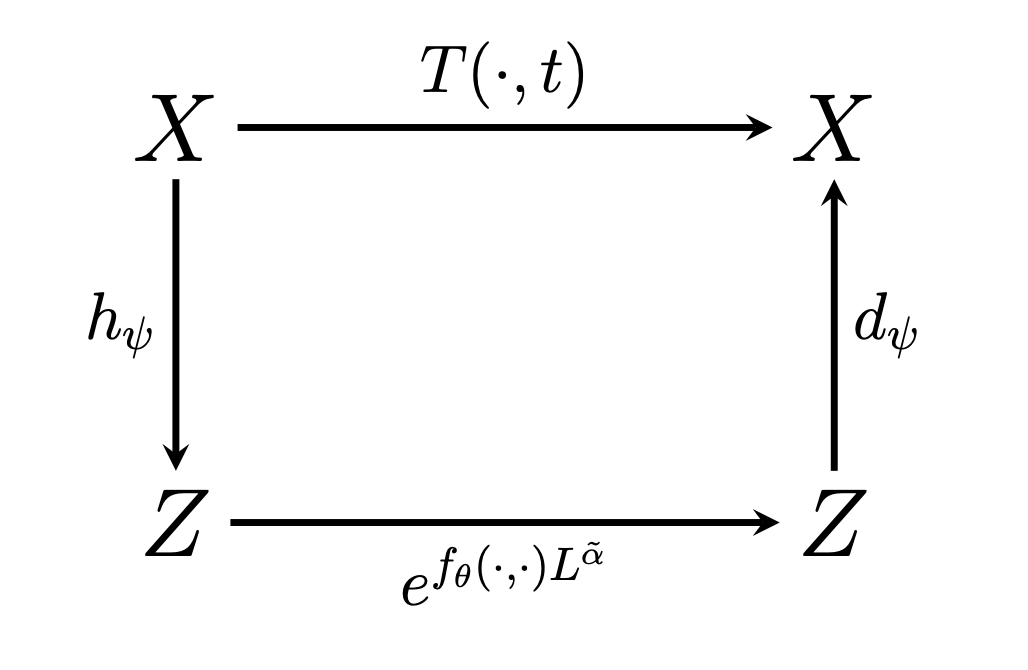

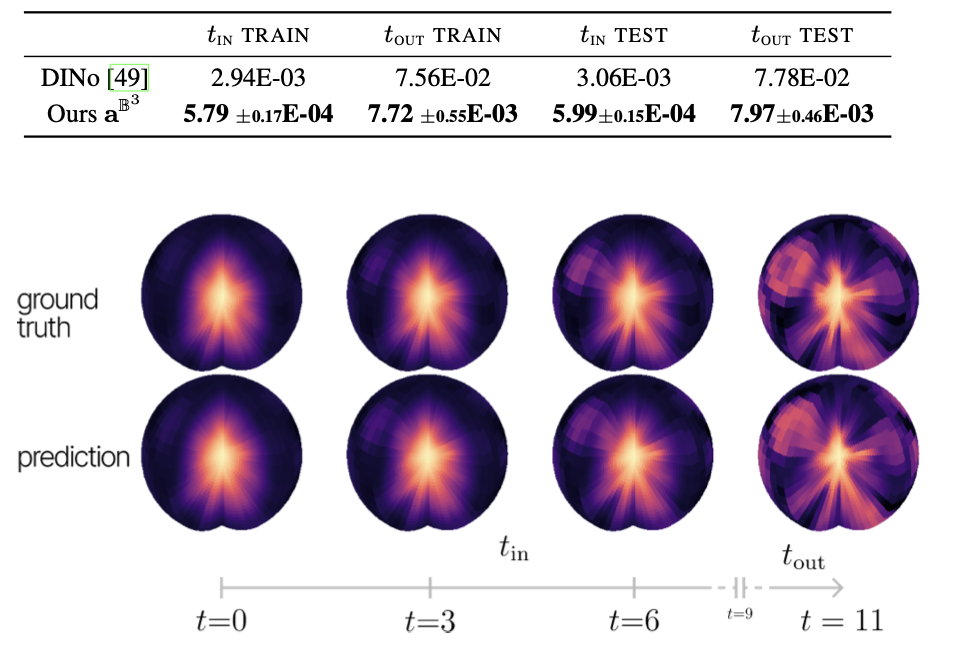

Recently, Conditional Neural Fields (NeFs) have emerged as a powerful modelling paradigm for PDEs, by learning solutions as flows in the latent space of the Conditional NeF. Although benefiting from favourable properties of NeFs such as grid-agnosticity and space-time-continuous dynamics modelling, this approach limits the ability to impose known constraints of the PDE on the solutions – e.g. symmetries or boundary conditions – in favour of modelling flexibility. Instead, we propose a space-time continuous NeF-based solving framework that - by preserving geometric information in the latent space - respects known symmetries of the PDE. We show that modelling solutions as flows of pointclouds over the group of interest G improves generalization and data-efficiency. We validated that our framework readily generalizes to unseen spatial and temporal locations, as well as geometric transformations of the initial conditions - where other NeF-based PDE forecasting methods fail - and improve over baselines in a number of challenging geometries.

Published:

This is a description of your talk, which is a markdown files that can be all markdown-ified like any other post. Yay markdown!

Published:

Published:

Published:

This is a description of your conference proceedings talk, note the different field in type. You can put anything in this field.

Undergraduate course, University 1, Department

This is a description of a teaching experience. You can use markdown like any other post.

Workshop, University 1, Department

This is a description of a teaching experience. You can use markdown like any other post.